The latest edition of the journal ‘Distance Education’ is focused on the use of learning analytics:

> the aim of this special themed issue…is to foster scientific debate…to understand how learning analytics can lead to an understanding of learning and teaching processes while (re)invigorating the quality of online and distance education.

This is an important topic, and since this is not an open access journal, I will do several posts focused on this issue for those who don’t want to or can’t pay a subscription to Taylor and Francis. Nevertheless, if you are at all interested in any of my summaries, please go to the original source.

I start with a look at the use of learning analytics to examine course design issues at the UK Open University.

Holmes, W. et al. (2019) Learning analytics for learning design in online distance education, Distance Education, Vol. 40, No. 3

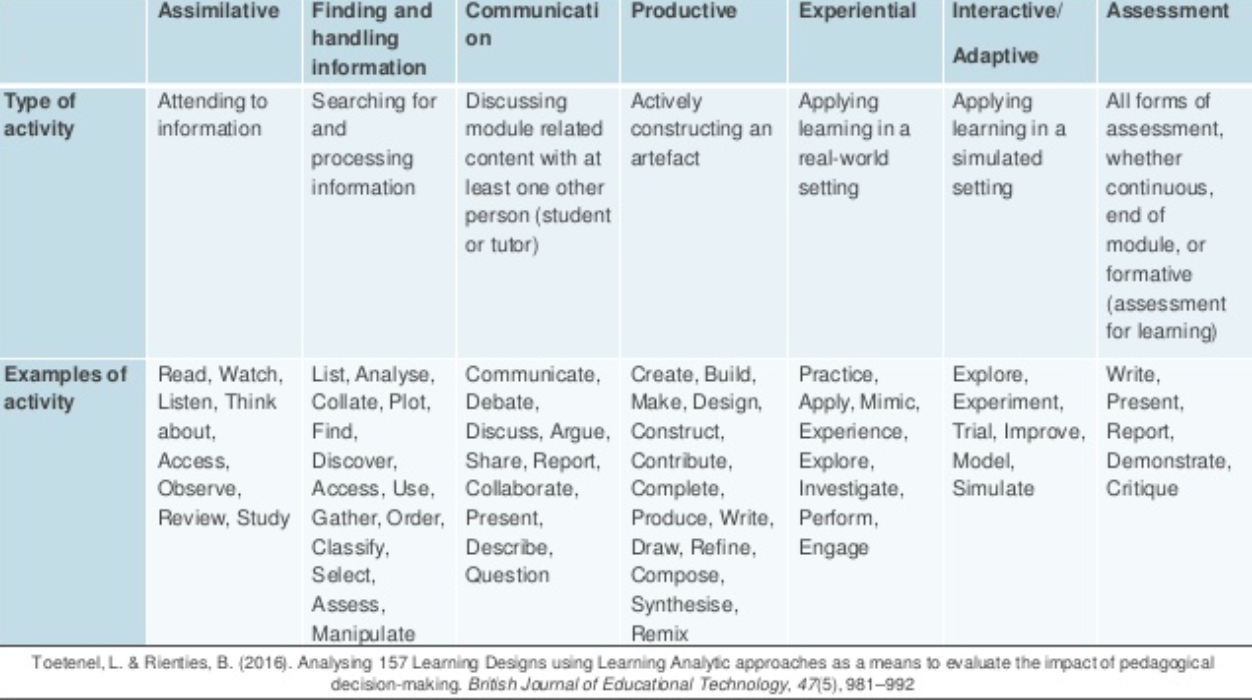

This article reports on an imaginative application of learning analytics to learning design at the UK Open University. The OU has specified that in the design of its online modules (courses), seven different kinds of student learning activities should be included:

- assimilative: reading, watching, listening

- knowledge management: finding, analysing, gathering information

- communication: discussion, collaboration, presenting

- productive: creating, constructing, designing an artefact

- experiential: practising, applying, exploring

- interactive: experimenting, modelling, simulating

- assessment: writing, demonstrating, critiquing, reporting

For more on the OU’s Learning Design Initiative, see Cross et al. (2012).

Holmes and colleagues examined 55 OU modules (courses) that had been developed using learning design. Using network analysis, they ‘clustered’ the combinations of the seven learning activities, which resulted in roughly six different clusters of activity combinations across the 55 modules.
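To make the idea of grouping modules by their activity mix more concrete, here is a minimal sketch. It is not the method used in the article, whose network analysis is not described in this post. It assumes each module's design is recorded as planned hours per activity type, links modules whose mixes are very similar (cosine similarity above an arbitrary threshold), and treats each connected group as a cluster. The module names, hours and threshold are all invented for illustration.

```python
from math import sqrt

# The seven OU activity types, in the order used for each vector below.
ACTIVITIES = ["assimilative", "knowledge management", "communication",
              "productive", "experiential", "interactive", "assessment"]

# Hypothetical planned study hours per activity type (invented numbers).
designs = {
    "module_a": [60, 5, 5, 5, 5, 0, 20],
    "module_b": [55, 10, 5, 0, 5, 5, 20],
    "module_c": [20, 10, 30, 20, 10, 0, 10],
    "module_d": [15, 10, 35, 20, 10, 0, 10],
}

def cosine(u, v):
    """Cosine similarity between two activity-hour vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v)))

def cluster(designs, threshold=0.9):
    """Link modules whose similarity is at least `threshold`,
    then return the connected groups as sorted lists of names."""
    names = list(designs)
    neighbours = {n: set() for n in names}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if cosine(designs[a], designs[b]) >= threshold:
                neighbours[a].add(b)
                neighbours[b].add(a)
    clusters, seen = [], set()
    for n in names:
        if n in seen:
            continue
        stack, group = [n], set()
        while stack:  # depth-first walk over linked modules
            cur = stack.pop()
            if cur in group:
                continue
            group.add(cur)
            stack.extend(neighbours[cur] - group)
        seen |= group
        clusters.append(sorted(group))
    return clusters

print(cluster(designs))
```

With these invented figures, the two assimilation-heavy modules end up in one group and the two communication-heavy modules in another.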

They then looked at three measures of student activity for nearly 50,000 students studying these 55 modules. The three measures were:

- student use of the VLE (LMS), in terms of time spent by each student on the VLE

- student pass rates on the module

- student end-of-module satisfaction score.

The analysis looked for correlations between the different clusters of designed activities and each of the three student measures (use of the VLE, pass rates, and student satisfaction).
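As a rough illustration of what looking for such a relationship involves, the sketch below computes eta squared (the correlation ratio), which is the share of the variation in an outcome that is accounted for by group membership. This is an assumption about how one might approach the question, not the statistic reported in the article, and it is an effect size rather than a significance test. The cluster names and pass rates are invented.

```python
from statistics import mean

# Hypothetical module pass rates (%), grouped by design cluster (invented).
pass_rates = {
    "cluster_1": [70.0, 72.0, 68.0],
    "cluster_2": [71.0, 69.0, 70.0],
}

def eta_squared(groups):
    """Share of total variance in the outcome explained by group membership.
    0.0 means the group means are identical; 1.0 means the groups
    account for all of the variation."""
    values = [v for g in groups.values() for v in g]
    grand = mean(values)
    ss_total = sum((v - grand) ** 2 for v in values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups.values())
    return ss_between / ss_total if ss_total else 0.0

print(eta_squared(pass_rates))
```

In this invented example both clusters have the same average pass rate, so cluster membership explains none of the variation between modules, which is the kind of null result the study reports.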

Unfortunately, although the study raises a number of extremely interesting questions about learning design, the results of applying learning analytics in this case are underwhelming, since there were no significant correlations with any of the three student measures. In other words, there was no evidence that different clusters of student activities had any measurable effect on student behaviour.

What the study did find though raises a lot of questions about the OU’s learning design process:

- the OU’s learning design rubric makes no recommendation regarding priorities for the seven activities;

- however, most modules are heavy on instructional (instructor-directed) activities such as assimilation (reading, or viewing videos) and assessment, and are relatively light on the more learner-centred activities such as production and experience;

- quantifying different learning activities is problematic: time spent on an activity is a crude measurement of learning effectiveness;

- VLE trace behaviour is also a poor measure of actual student learning activity.

In other words, even with a large base of learning designs (55) and learners (nearly 50,000), the study failed to find any correlations that would explain student success or failure related to different learning designs. The most valuable part of the study was identifying how learning design is actually applied at the OU. It seems that it is as difficult at the OU as elsewhere to get away from the traditional focus on content delivery and assimilation.

However, in terms of learning analytics identifying optimum learning design models, the researchers came out empty-handed. For more on the use of learning analytics at the Open University, see Learning analytics, student satisfaction, and student performance at the UK Open University.

Up next

Learning analytics, personality traits and learning outcomes.

Dr. Tony Bates is the author of eleven books in the field of online learning and distance education. He has provided consulting services specializing in training in the planning and management of online learning and distance education, working with over 40 organizations in 25 countries. Tony is a Research Associate with Contact North | Contact Nord, Ontario’s Distance Education & Training Network.
